At ISPRS GSW 2025, we are excited to offer four tutorials carefully crafted to align with the conference theme, “Photogrammetry and Remote Sensing for a Better Tomorrow.” These tutorials will take place on Sunday, April 6th, 2025, providing a dedicated day for in-depth learning and skill development. Each tutorial will run for 3 hours, divided into two 1.5-hour sessions with a 30-minute break in between.

The tutorials will cover topics spanning photogrammetry, remote sensing, spatial sciences, and related technologies, ensuring participants gain a well-rounded understanding of the field. Designed to address recent advancements, methodologies, tools, and practical applications, these sessions will equip attendees with the knowledge needed to tackle current challenges and innovate for the future. Whether you are a researcher, practitioner, or student, these tutorials promise an engaging and enriching learning experience.

Tutorial Registration:

Tutorial Cancellation policy:

6th April 2025 | 09:00 – 12:30 | Ajman (A) Hall

Presented By: Ayman F. Habib, Thomas A. Page Professor in Civil Engineering, Lyles School of Civil and Construction Engineering, Purdue University, USA

Overview: The continuous developments in direct geo-referencing technology (i.e., integrated Global Navigation Satellite Systems – GNSS – and Inertial Navigation Systems – INS) and remote sensing systems (i.e., passive and active imaging sensors in the visible and infrared range – RGB cameras, hyperspectral push-broom scanners, and laser scanning) are providing the professional geospatial community with ever-growing opportunities to generate accurate 3D information with a rich set of attributes. These advances are coupled with improvements in sensor performance, reductions in the associated cost, and miniaturization of the sensors. Aside from the sensing systems, we are also seeing the emergence of promising platforms such as Uncrewed Aerial Vehicles (UAVs). The tutorial will involve two practical exercises. The first deals with the interpretation of a quality report derived from a generic Structure from Motion (SfM) workflow for a block of UAV images. The second deals with the inspection of point cloud data to infer the presence of random and systematic errors.

Objectives and Learning Outcomes: This tutorial will provide an overview of recent activities focusing on UAV-based mapping while highlighting:

Target audience and prerequisites: The tutorial is aimed at researchers and practitioners interested in using uncrewed aerial vehicles for near proximal sensing, with directly georeferenced imagery and/or LiDAR data, for cost-effective mapping that addresses the needs of various applications (e.g., transportation, agriculture, forestry, public safety, and infrastructure monitoring). The basic principles of photogrammetric and LiDAR mapping will be covered. The audience should have some background in using geospatial data and products from imaging and ranging systems.

Technical requirements (software, hardware, etc.): Trial version of PIX4Dmapper for the first practical exercise and CloudCompare for the second one. A laptop with reasonable processing power will be sufficient. Wireless or wired internet connection is required.

Brief biography of the instructor: Ayman Habib is the Thomas A. Page Professor at the Lyles School of Civil and Construction Engineering at Purdue University. He is the Co-Director of the Civil Engineering Center for Applications of UAS for a Sustainable Environment (CE-CAUSE). He is also the Associate Director of Purdue University’s Joint Transportation Research Program (JTRP) and Institute of Digital Forestry (iDiF). He is a Fellow of the American Society for Photogrammetry and Remote Sensing. He received the M.Sc. and Ph.D. degrees in photogrammetry from The Ohio State University, Columbus, OH, USA, in 1993 and 1994, respectively. His research interests include the fields of terrestrial and aerial mobile mapping systems using photogrammetric and LiDAR remote sensing modalities, UAV-based 3D mapping, and integration of multi-modal, multi-platform, and multi-temporal remote sensing data for applications in transportation, infrastructure monitoring, environmental protection, precision agriculture, digital forestry, resource management, and archeology.

Dr. Habib has authored more than 500 publications, including one book and more than 160 peer-reviewed journal papers. He has supervised more than 30 PhD students to completion. He is the recipient of several awards, including the Duane C. Brown Senior Award from The Ohio State University (1997); Talbert Abrams “Grand Awards” from the American Society for Photogrammetry and Remote Sensing (ASPRS) in 2002, 2008, 2016, and 2024; Talbert Abrams “First Honorable Mention” from the ASPRS in 2008 and 2022; Talbert Abrams “Second Honorable Mention” from the ASPRS in 2004; the Best Paper Award from the International Society for Photogrammetry and Remote Sensing (ISPRS) in 2004; the President’s Citation Award from the ISPRS in 2012; the ASPRS Photogrammetric Fairchild Award in 2011; Purdue’s Most Impactful Faculty Inventors in 2019 and 2021 for starting companies based on College of Engineering intellectual property; the 2018 Purdue Research Foundation (PRF) Innovator Hall of Fame award; Purdue’s Seed for Success Award in 2016 and 2020; and the 2017 Outstanding Achievement Award, presented at the 10th International Conference on Mobile Mapping Technology in recognition of pioneering contributions in developing and promoting Mobile Mapping Technology.

6th April 2025 | 09:00 – 12:30 | Ajman (D) Hall

Overview: This tutorial provides a hands-on approach to urban monitoring and analysis using remote sensing and spatial information technology. Participants will explore key concepts such as urban heat islands, local climate zones, urban air quality, and spatial patterns. Through guided exercises and case studies, attendees will learn practical techniques for urban data extraction, classification, and spatial analysis. The session is ideal for those with GIS skills, basic programming knowledge, and an active Google Earth Engine account.

Objectives and Learning Outcomes: The tutorial will focus on urban monitoring and analysis with remote sensing and spatial information technology, providing participants with a comprehensive understanding of the subject matter, including recent advancements, methodologies, tools, and practical applications. Attendees will learn about the urban heat island effect and the local climate zone classification system, and how to conduct a local climate zone classification for urban environments. The tutorial will last 3 hours. It will begin with a brief 10-minute introduction, followed by four lectures of about 30 minutes each, with 10 minutes left for Q&A.

Target audience and prerequisites: Good GIS skills, and a basic understanding of remote sensing, image processing, and classification techniques. Basic programming skills (Python, JavaScript). Activated Google Earth Engine account. An activated Google account is required to use Google Colab.

Technical requirements (software, hardware, etc.): Projector, computer, microphone, laser pointer, and other essentials. Participants need to have QGIS (long-term release) installed to carry out the practical part. Participants also need an activated Google Earth Engine (GEE) account and an activated Google account to use Google Colab.

In this tutorial, participants will explore GEE’s JavaScript interface (GEE JS) to conduct supervised land use and land cover classification (LULCC), showcasing its analytical strength in spatial analysis. Through a hands-on exercise, participants will gain practical experience and enhance their understanding of key concepts in remote sensing, spatial analysis, and cloud computing. By working with real-world examples, they will learn to apply these skills effectively in diverse contexts.

Objectives and Learning Outcomes: By the end of this tutorial, participants will:

The tutorial outline is listed below:

1. Introduction to Google Earth Engine (GEE)

2. Accessing and Preparing Satellite Imagery

3. Working with Training Data

4. Supervised Classification Algorithms

5. Validating and Evaluating the Classification

6. Visualizing and Exporting Results

7. Case Study: LULC Classification of a Specific Region

8. Q&A and Troubleshooting

Target audience and prerequisites: This tutorial is ideal for environmental scientists, practitioners, and researchers involved in Land Use and Land Cover (LULC) analysis, as well as students and professionals in GIS, remote sensing, data science, and spatial data analysis. It is also suitable for those with basic programming skills who wish to expand their knowledge of Google Earth Engine (GEE) and LULC classification techniques. Participants should have a Google Earth Engine account, a foundational understanding of remote sensing and geographic information systems (GIS), and basic programming skills.

Technical requirements (software, hardware, etc.): Participants need an activated Google Earth Engine (GEE) account, a stable internet connection, a modern web browser, and a computer with 8 GB RAM. Basic programming skills and access to external GIS data files are also recommended.

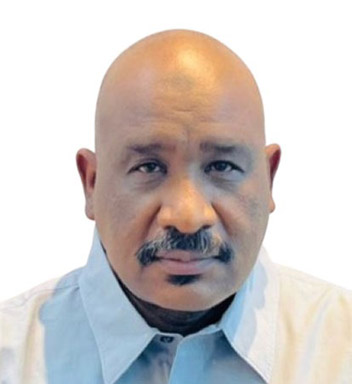

Brief biography of the instructor:

Dr. Rifaat Abdalla is a faculty member in the Department of Earth Sciences at the College of Science, Sultan Qaboos University. With expertise in geospatial science and environmental analysis, Dr. Abdalla has contributed extensively to research in Earth sciences, specializing in remote sensing and land use classification.

Dr. Eltaib Saeed Ganawa is a professor at the Faculty of Geographical and Environmental Sciences at the University of Khartoum, Sudan. Dr. Ganawa’s work focuses on GIS, spatial data analysis, and environmental management, with a strong background in integrating geospatial technology into environmental research.

Dr. Mohammed Mahmoud Musa is a member of the Faculty of Computer Science and Information Technology at Alzaiem Alazhari University, Sudan. His research spans GIS applications, data processing, and remote sensing, applying computational techniques to environmental and geographical studies.

Dr. Anwarelsadat Eltayeb Elmahal also serves at the Faculty of Geographical and Environmental Sciences, University of Khartoum. With a background in geospatial analysis, Dr. Elmahal specializes in environmental assessment and spatial data applications, contributing valuable insights into regional land use and environmental policy.

Overview: This course introduces different methods for Synthetic Aperture Radar (SAR) data processing. Students will learn how to develop code for these methods with the help of large language models (LLMs). It is designed for graduate students in Geographic Information Sciences and Remote Sensing. The course includes the following topics:

Objectives and Learning Outcomes:

Target Audience and Prerequisites: This course is designed for graduate students in Geographic Information Sciences, Remote Sensing, or related fields who have a foundational understanding of SAR remote sensing and image processing. Participants should be comfortable with Python programming, as coding for SAR methods is a core component of the course. Prior exposure to SAR processing and SAR interferometry is helpful but not required. An interest in using large language models (LLMs) for coding support and processing chain development will also be beneficial.

Technical requirements (software, hardware): Participants need a stable internet connection, a modern web browser (preferably Chrome or Firefox), and a computer with at least 8 GB of RAM. A working Python programming environment is also required for the hands-on coding exercises.

Brief introduction of instructor:

Prof. Timo Balz is an expert in remote sensing and Geographic Information Sciences, with a focus on synthetic aperture radar (SAR) technology for surface motion estimation. He has published extensively and contributed to significant research projects in advanced remote sensing methodologies.